Twenty Years after Andrew—How Far Have We Come?

Aug 23, 2012

Editor's Note: On the twentieth anniversary of the storm that changed the insurance industry forever, AIR Senior Vice President of Business Development Bill Churney and Senior Editor Nan Ma reassess the value of catastrophe modeling.

Within hours of Hurricane Andrew's landfall in South Florida in late August 1992, AIR Worldwide estimated that insured losses could reach as high as USD 13 billion. Even as insurers grappled with what they recognized as an unprecedented situation, the reaction across the industry was resoundingly one of skepticism. For companies managing their risk based on historical experience, such a loss was simply unimaginable. Months later, long after the winds had subsided, the final tally from ISO's Property Claim Services® came in at USD 15.5 billion. But the real storm was just beginning.

Andrew, the first of only four hurricanes that season, became a defining event in the modern history of insurance risk management. If loss potential had been so grossly underestimated in the country's most hurricane-prone state, what other unknown risks lurked? As a five-year-old company offering an alternative to long-standing traditional actuarial techniques, AIR did not have all the answers. But in creating the U.S. hurricane model that founded the catastrophe modeling industry, AIR introduced a novel framework¹ for managing the potential for extreme losses.

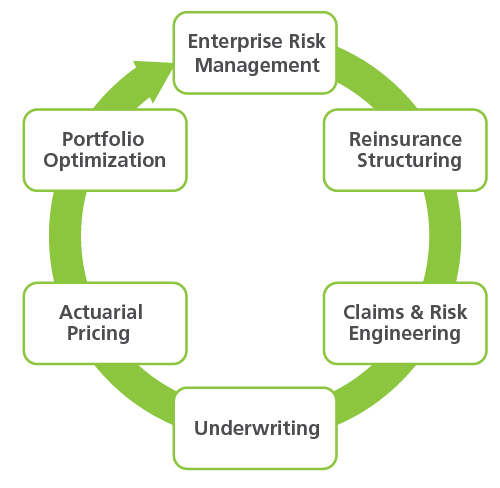

Catastrophe modeling became the new paradigm after Hurricane Andrew. AIR's scope has expanded from the single model it offered at its founding in 1987 to a truly global vision that today spans more than 90 countries and counting. On the twentieth anniversary of Hurricane Andrew, as analytics are used more than ever to inform a wide range of business decisions, we take a critical look at the value of catastrophe modeling and how far the industry has come.

Before Andrew

The origins of modern property-casualty insurance² can be traced to 17th-century England, where a massive conflagration in London in 1666 prompted the formation of the first fire mutual companies; the United States followed suit in the 18th century. During the industrial boom, devastating large-scale urban fires (including those in New York in 1835, Chicago in 1871, and Boston in 1872) bankrupted many insurance companies and compelled the survivors to seek objective approaches to selecting policies and pricing risk.

To assess risk in urban areas, companies consulted fire insurance maps such as those published by the Sanborn Map Company starting in the late 1800s, which showed information like building footprints, construction materials, and proximity to other buildings. Insurers also marked the locations of the properties they underwrote with pins on a map, so that no single fire could wipe out a large share of their book. The practice of pin mapping continued when insurance coverage expanded to hurricanes and other windstorms in the 1930s. These maps, although primitive by today's standards, upheld a certain level of underwriting discipline and helped prevent excessive concentrations of exposure along hurricane-prone stretches of coast and in areas often struck by tornadoes.

The absence of major catastrophe losses³ and the time-consuming nature of pin mapping meant that the practice had largely fallen out of favor by the 1960s and 1970s. There was a sense of complacency about catastrophe risk, and many companies relied on their limited historical claims experience to estimate potential losses. Meanwhile, coastal development continued at a rapid pace: the population density of the southeast Atlantic coast increased by 75% in the two decades between 1970 and 1990.⁴

As computing power increased in the 1980s, some companies adopted more advanced analytics, running deterministic models of a small set of high-impact scenarios against their portfolios of properties. Scenario testing is relatively simple to execute and conceptually easy to understand. Its results are also easy to communicate, and they give regulators and rating agencies a common basis for comparing companies.

However, basing risk management strategies on specific events, either hypothetical or historical, creates blind spots to alternative scenarios that could produce similar or even greater losses. Even with the use of deterministic modeling and more sophisticated forms of exposure concentration management, the view of hurricane risk in the United States was incomplete and out of date; rates were insufficient and potential losses were significantly underestimated.
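To make the blind-spot problem concrete, the kind of scenario testing described above can be sketched in a few lines of Python. Everything here is hypothetical and for illustration only: the region names, insured values, damage ratios, and the helper functions `scenario_loss` and `run_scenarios` are assumptions, not any company's actual method. Each scenario applies an assumed damage ratio to the insured value in each affected region, and the portfolio loss is the sum.

```python
# Minimal sketch of deterministic scenario testing (all data hypothetical).
# Portfolio: region -> total insured value, in USD millions.
PORTFOLIO = {
    "Region A": 900.0,
    "Region B": 600.0,
    "Region C": 400.0,
}

# Each hypothetical scenario assigns a damage ratio (fraction of insured
# value lost) to the regions it affects; unlisted regions are undamaged.
SCENARIOS = {
    "Scenario 1": {"Region A": 0.20, "Region B": 0.10},
    "Scenario 2": {"Region C": 0.25, "Region B": 0.05},
}


def scenario_loss(portfolio, damage_ratios):
    """Portfolio loss for one scenario: sum of insured value * damage ratio."""
    return sum(portfolio.get(region, 0.0) * ratio
               for region, ratio in damage_ratios.items())


def run_scenarios(portfolio, scenarios):
    """Return {scenario name: loss} for every scenario in the chosen set."""
    return {name: scenario_loss(portfolio, ratios)
            for name, ratios in scenarios.items()}


if __name__ == "__main__":
    results = run_scenarios(PORTFOLIO, SCENARIOS)
    for name, loss in sorted(results.items(), key=lambda kv: -kv[1]):
        print(f"{name}: USD {loss:.1f}M")
    # The "worst case" is only the worst of the scenarios someone chose to test.
    print("Worst tested scenario:", max(results, key=results.get))
```

The output ranks only the scenarios chosen in advance. A storm that follows a different track or strikes with a different intensity never appears in the results, and nothing in the output says how likely any of the tested losses is.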

The Storm that Changed Everything

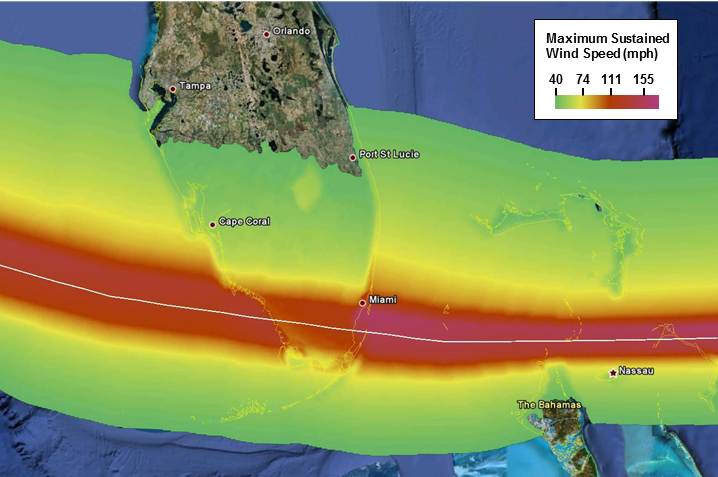

Hurricane Andrew struck Homestead, Florida, on August 24, 1992, as a Category 5⁵ storm with violent winds of up to 167 mph and a massive 17-foot storm surge. Although Andrew was a small storm, it caused extreme destruction along its track in the four hours it took to cross the state. It then entered the Gulf of Mexico, where it caused an estimated half a billion dollars of damage to offshore oil and gas structures, before making a second landfall in Louisiana at Category 3 strength. In total, Andrew damaged or destroyed more than 125,000 homes and 80,000 businesses, and agricultural losses totaled more than USD 1 billion (in 1992 dollars). Economic losses in the U.S. were estimated at close to USD 25 billion, of which USD 15.5 billion was insured.

Hurricane Andrew contributed to the insolvency and closure of 11 insurance companies and drained substantial equity from some 30 more. Beyond the difficulty of settling an unprecedented number of claims, the event placed other extreme stresses on the property/casualty market, some of which remain today. Rates and deductibles rose sharply, policies were canceled or not renewed, and some national primary insurers attempted to withdraw from the Florida market entirely. To protect homeowners, Florida legislators enacted measures limiting how abruptly companies could reduce their market share in the state.

Meanwhile, a state-run insurer of last resort, which eventually became Citizens Property Insurance Corporation, was set up to address the widening shortage of affordable coverage for homeowners and businesses. Citizens is now the largest property insurer in the state, but its reserves are likely insufficient to pay claims in the event of a large storm. Although a state reinsurance fund has also been established, tax assessments would be needed to make up the difference.

Reinsurance markets reacted, too, by raising prices and contracting operations in Florida. In turn, the industry had to seek other sources of reinsurance capacity, a search that in recent years has driven the development of insurance-linked securities that transfer risk to the capital markets. In addition, rating agencies, policymakers, and boards of directors became acutely concerned about the impact of major catastrophes on reserves, earnings, and solvency. They began requiring companies to demonstrate capital adequacy and robust risk management processes, and to report estimated catastrophe losses.

These are just some of the still-evolving changes to the insurance market that Hurricane Andrew set in motion. Suffice it to say, the industry quickly recognized the severe limitations of relying on historical loss data, either alone or in conjunction with exposure concentration management and deterministic modeling. The central missing piece was a quantification of the frequency and severity of potential events. To understand the impact of catastrophes, it is essential to understand the catastrophes themselves, and only probabilistic modeling can provide that understanding.

Back to Fundamentals

The catastrophe modeling framework that AIR Worldwide established in 1987 remains essentially unchanged to this day. The goal then, as it is now, was to first provide a realistic and robust view of the hazard and then to estimate the potential impact on exposed properties based on objective engineering principles.
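The two-step framework above (hazard first, then engineering-based impact) ultimately produces a set of simulated events, each with an annual frequency and a loss. The Python sketch below shows, under simplifying assumptions, how such a catalog yields the frequency-and-severity view that scenario testing lacks: an average annual loss and an occurrence exceedance probability. The five-event catalog and its numbers are hypothetical, the function names are illustrative, and treating events as independent Poisson processes is a common modeling simplification, not a description of AIR's models. A real catalog would contain far more events.

```python
import math

# Hypothetical stochastic catalog: (annual rate of occurrence, portfolio loss
# in USD millions). In a full model the rates would come from the hazard
# component and the losses from engineering-based damage functions applied
# to the exposed properties; these numbers are purely illustrative.
CATALOG = [
    (0.100, 50.0),
    (0.050, 200.0),
    (0.020, 600.0),
    (0.010, 1500.0),
    (0.002, 4000.0),
]


def average_annual_loss(catalog):
    """Expected loss per year: the sum of rate * loss over all events."""
    return sum(rate * loss for rate, loss in catalog)


def occurrence_exceedance_prob(catalog, threshold):
    """Probability that at least one event with loss >= threshold occurs in a
    year, assuming events arrive as independent Poisson processes."""
    exceeding_rate = sum(rate for rate, loss in catalog if loss >= threshold)
    return 1.0 - math.exp(-exceeding_rate)


if __name__ == "__main__":
    print(f"Average annual loss: USD {average_annual_loss(CATALOG):.1f}M")
    for threshold in (100.0, 500.0, 1000.0, 3000.0):
        p = occurrence_exceedance_prob(CATALOG, threshold)
        print(f"P(loss >= USD {threshold:.0f}M in a year) = {p:.4f}"
              f" (return period ~{1 / p:.0f} years)")
```

Unlike a single deterministic scenario, every loss threshold here comes with an annual probability, so a company can ask how often a loss of a given size should be expected rather than only whether a chosen event would be survivable.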

Long before Andrew struck, AIR recognized that hurricane losses were severely underestimated in the United States. AIR's stochastic catalog contained scenarios of hurricanes striking Florida that would cause more than USD 40 billion in insured losses;⁶ no other source was providing this type of information to the industry at the time. Given what AIR knew about Atlantic hurricane activity and the exposures at risk, the final tally from Andrew fell well within AIR's expectations.⁷

Thanks in part to the widespread adoption of catastrophe modeling after Andrew, the industry has become much better equipped to handle extreme losses. After the record-breaking 2004 and 2005 seasons, only three very small Florida-based companies became insolvent, and none of those insolvencies was attributed to Hurricane Katrina. The industry also appears to have come through the large global losses of 2011 intact. Yet in the wake of these recent large-loss catastrophes and other upheavals in the market, models are once again under increased scrutiny. Critics within the industry contend that models miss the mark too often, and some are skeptical of the science behind model updates.

The British statistician George Box famously remarked that "all models are wrong, but some are useful." We do not necessarily disagree. The accuracy of a catastrophe model is bounded by the limits of human knowledge, which will never be perfect. AIR's models represent the current understanding of complex physical phenomena; as that understanding improves and evolves, the models will become more detailed and sophisticated. In the end, catastrophes cannot be predicted, and the pitfalls of relying on past history or preparing for a selected set of events are all too apparent; disciplined risk management must take the long view. Probabilistic modeling is the only tool currently available that provides a comprehensive and robust perspective on possible losses.

1 AIR founder Karen Clark first made the argument for probabilistic modeling in her seminal 1986 paper, "A Formal Approach to Catastrophe Risk Assessment and Management," Proceedings of the Casualty Actuarial Society, Vol. LXXIII, No. 139 (http://www.casact.org/pubs/proceed/proceed86/86069.pdf).

2 The actual practice of insurance can be traced back to maritime commerce in ancient times, as merchants, ship owners, and investors wanted to secure the value of vessels and cargo against piracy and shipwreck.

3 Until Hurricane Hugo in 1989, no disaster in the world had cost insurers more than one billion dollars.

4 ISO, "The Impact of Catastrophes on Property Insurance," 1994 (http://www.iso.com/Research-and-Analyses/Studies-and-Whitepapers/The-Impact-of-Catastrophes-on-Property-Insurance.html)

5 Hurricane Andrew was originally classified as a Category 4 storm, but was later upgraded by the National Hurricane Center to Category 5. For an explanation of the reanalysis, please refer to http://www.air-worldwide.com/Publications/AIR-Currents/2012/A-Note-on-the-Reanalysis-of-Hurricane-Andrew%E2%80%99s-Intensity/

6 AIR Worldwide, CATMAP documentation, 1992

7 AIR estimates that Hurricane Andrew would result in losses of over USD 56.7 billion were it to strike today.